I have been working with photogrammetry to capture objects for around 5 years now. It’s one of my favorite classes to teach at the community college where I work. You rarely get to do a lot of physical work when you create games. There’s something comfy about being able to interact with your subject and set up the lights and the physical camera that you don’t get when you are usually stuck doing art on a screen. I have a home studio set up to capture assets. It’s usually much more cluttered than this image.

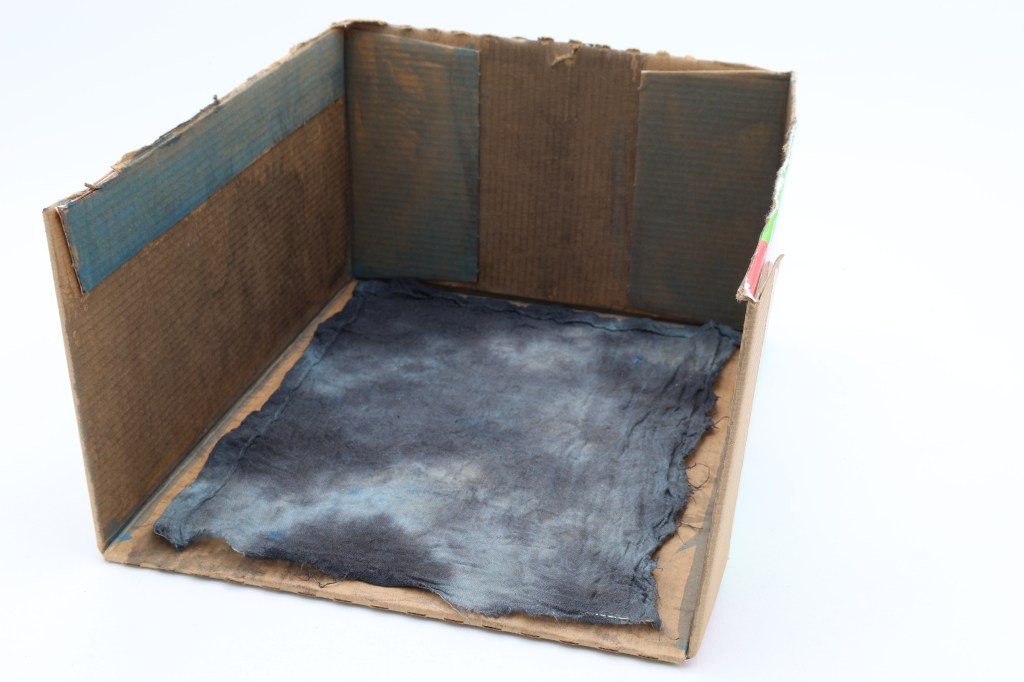

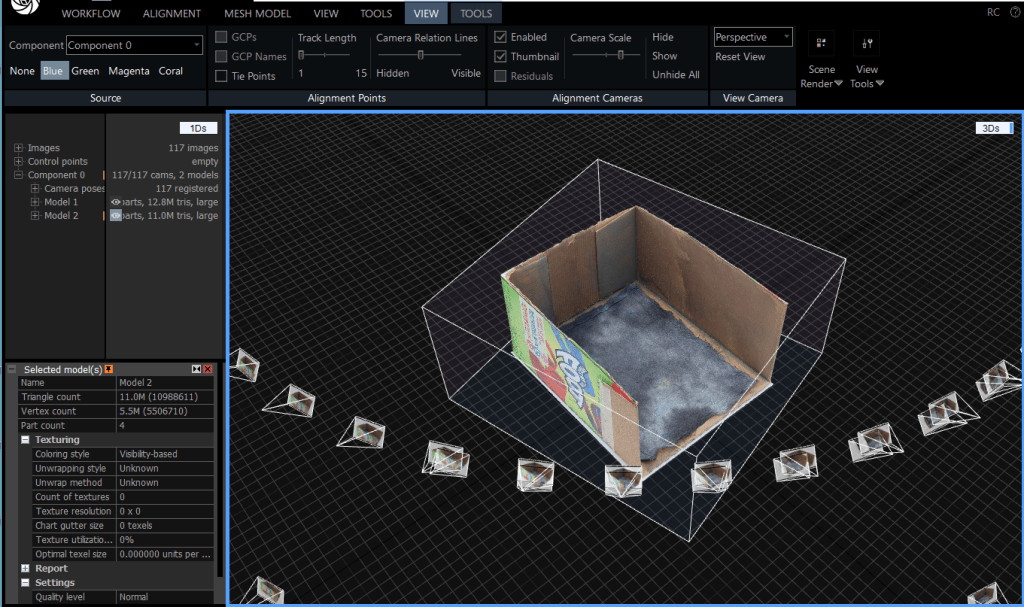

I wanted to get a small environment built and captured so I can start my prototype. I built a real basic diorama scene out of stuff I found in my studio (isn’t most surrealist stop motion made from found objects anyway?). I’ve been working more with a white infinity background in my box (seen on the table in the picture). The lighting has been coming out better when capturing against white, but it can be hard to get it looking like it’s on the infinity background.

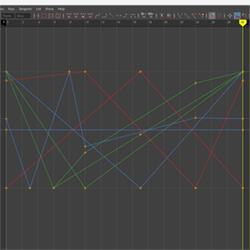

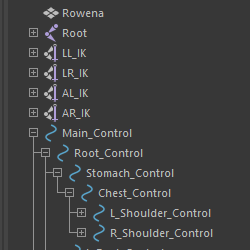

I am trying to make the jump to Blender from Maya. I usually do my photogrammetry contract work using ZBrush and Blender for cleanup. Because I wanted to do it quickly, I did resort to using Maya to create a low-poly version so it would work better in VR. I used Substance Painter to do my high-to-low bake for the material.

I had the chair and table models from a previous experimental photogrammetry project, so I could repurpose them! Out of all the processes I’m going to be dealing with, the capture and cleanup of the assets is probably the one I am most confident about. I am still trying to work out some of the finer details, like whether it would have been better to capture the walls, floor, etc. individually and compose them together in Unity. It would be slower and require more captures, but the quality would be higher.
